In the rapidly evolving world of Generative AI, one of the biggest challenges has been “hallucination”—models confidently inventing facts. This is especially critical when dealing with real-world data like travel routes, distances, and live traffic.

Enter Grounding.

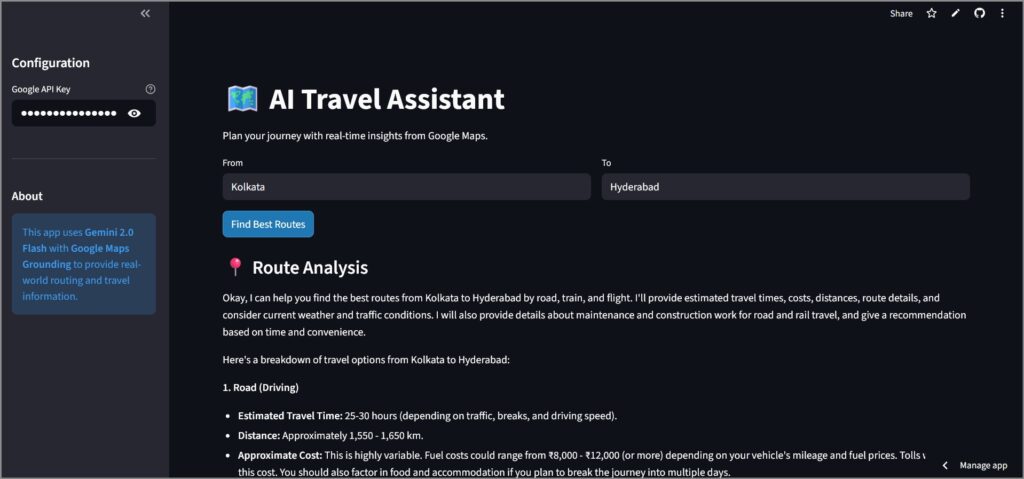

In this post, we’ll explore how to build a robust AI Travel Assistant using the new Gemini 2.0 Flash model and Google Maps Grounding. We’ll walk through the code, explain what “grounding” actually means in this context, and discuss when you should use it.

What is Google Maps Grounding?

Grounding is the process of connecting a model’s generation to verifiable sources of information. When we talk about Google Maps Grounding, we mean giving the AI model direct access to Google Maps data to answer spatial and location-based queries.

Instead of relying on its training data (which is static and outdated the moment it’s trained), the model can query Google Maps in real-time to get:

- Live Traffic Conditions: Is there a jam on the highway?

- Accurate Routing: What’s the actual driving distance right now?

- Place Details: Ratings, reviews, and operating hours.

- Transit Schedules: Real train and bus timings.

Why Use It?

- Accuracy: Eliminates hallucinations about distances and travel times.

- Freshness: Access to real-time data like traffic and construction delays.

- Context: The model understands “places,” not just text strings.

When to use Grounding with Google Maps

Grounding with Google Maps is ideal for applications that require accurate, up-to-date, and location-specific information. It enhances the user experience by providing relevant and personalized content backed by Google Maps’ extensive database of over 250 million places worldwide.

You should use Grounding with Google Maps when your application needs to:

- Provide complete and accurate responses to geo-specific questions.

- Build conversational trip planners and local guides.

- Recommend points of interest based on location and user preferences like restaurants or shops.

- Create location-aware experiences for social, retail, or food delivery services.

Grounding with Google Maps excels in use cases where proximity and current factual data are critical, such as finding the “best coffee shop near me” or getting directions.

The Code: Building the Assistant

We’ve built a simple yet powerful Streamlit application that takes a source and destination and provides a comprehensive travel plan.

Prerequisites

- Python 3.9+

streamlitgoogle-genaiSDK

Step 1: Setup and Configuration

First, we import the necessary libraries and configure the Streamlit page. We’re using the google-genai SDK, which simplifies interacting with the Gemini API.

import streamlit as st

import os

from google import genai

from google.genai import types

st.set_page_config(

page_title="Google Maps Assistant (GenAI)",

page_icon="🗺️",

layout="wide"

)

Step 2: The Power of the Prompt

The core of this application isn’t complex routing algorithms—it’s the prompt. Because the model has access to the Google Maps tool, we can ask it complex questions in natural language.

In our app, we ask for:

- Routes by Road, Train, and Flight.

- Estimates for time, cost, and distance.

- Live Conditions: Weather, traffic, and maintenance work.

prompt = f"""

I want to travel from {source} to {destination}.

Please find the best routes by:

1. Road (Driving)

2. Train (Transit)

3. Flight (if applicable)

For each mode, provide:

- Estimated travel time and cost

- Distance

- Key route details or transit lines

- Also, add details of weather conditions and traffic conditions

- For road and rail travel, give additional details about maintenance and construction work

Finally, give a recommendation on the best option based on time and convenience.

"""

Step 3: Invoking Gemini with Tools

This is the magic step. We initialize the genai.Client and call generate_content. The crucial part is the tools configuration.

client = genai.Client(api_key=api_key)

response = client.models.generate_content(

model='gemini-2.0-flash',

contents=prompt,

config=types.GenerateContentConfig(

tools=[types.Tool(google_maps=types.GoogleMaps())]

)

)

By passing types.Tool(google_maps=types.GoogleMaps()), we give the model permission to query Google Maps. It determines when to call the tool based on the user’s prompt.

Step 4: Displaying the Result

The response from Gemini is already a natural language summary grounded in the data it fetched. We simply display it in our app.

st.markdown("### 📍 Route Analysis")

st.markdown(response.text)

When to Use Google Maps Grounding?

This feature is a game-changer for specific verticals. Here is where it shines:

1. Travel & Hospitality

- Itinerary Planning: “Plan a 3-day trip to Paris with museums in the morning and vegan restaurants nearby.”

- Concierge Services: “How far is the airport from my hotel during rush hour?”

2. Logistics & Operations

- Rough Estimation: Quickly estimating delivery times or distances for ad-hoc queries without setting up complex routing engines.

- Contextual Alerts: “Are there any road closures on the usual route to the warehouse?”

3. Real Estate

- Neighborhood Analysis: “What schools and parks are within a 10-minute walk of this address?”

Conclusion

By combining the reasoning capabilities of Gemini 2.0 with the real-world data of Google Maps, we can build applications that are not just smart, but aware. They understand the physical world, making them infinitely more useful for daily tasks.

The code for this assistant is surprisingly simple, proving that the next generation of AI apps will be defined by how well we can orchestrate tools, rather than how complex our own algorithms are.

Check out the app here – https://gemini-app-maps-assistant.streamlit.app/

Check out the Github repo here – https://github.com/sethlahaul/gemini-google-maps-assistant